Training is a major line item for every call center – and one that delivers real ROI. But in a vacuum, training can only go so far.

Equally critical is knowing whether that investment is actually paying off. Without a clear way to measure training effectiveness, companies keep spending while performance and ROI remain unclear.

The trouble that most call center training runs into is that traditional assessment methods don’t measure what matters. Knowledge checks and quizzes capture short-term recall. Class participation signals engagement. Completion rates demonstrate endurance. None of these are indicators of readiness for live calls.

These proxies leave operations leaders with little insight into whether their dollars are being well spent. And that lack of insight has consequences – not just for performance, but also for how an organization allocates its most precious resources.

Why it’s important to track if training is working

As a call center leader, every dollar you request is scrutinized. Finance expects airtight justifications for budget increases and clear explanations for losses. Without a reliable way to measure training effectiveness, you won’t be able to provide useful context.

The most obvious reason to track training efficacy is to spot underperformers. Assessment can reveal whether an agent just needs a bit more investment to succeed or whether they’re unlikely to meet the standard – regardless of how much support you provide.

But the other reason to assess performance is that, often, agents reach readiness sooner than expected. When that happens, the costliest mistake is to keep them in training for another month. It’s expensive, it’s demotivating, and it uses up precious resources that could be better directed to the team members who actually need them.

A 4-step framework to analyze training performance

Every organization has its own approach to assessing training efficacy, but certain steps are essential. Based on what we’ve seen from our customers at ReflexAI, this is the general framework we recommend:

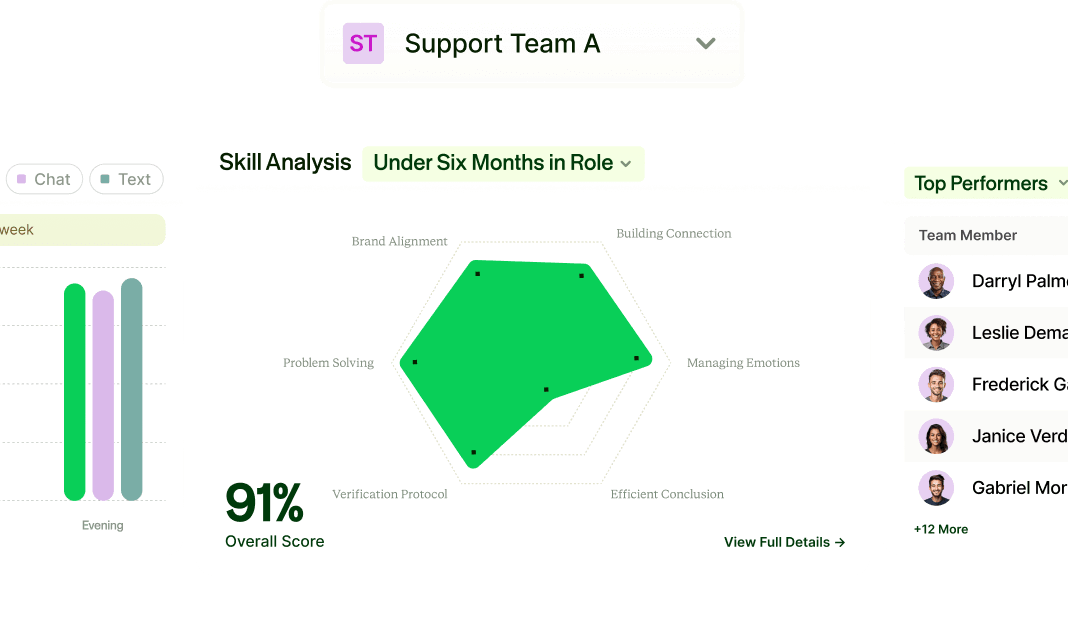

1. Analyze 100% of interactions

Leaders should be looking at the performance of every person on the line, across the skills and protocols that matter most to the organization. If you rely solely on spot checks or small sample reviews, you’ll miss the big picture.

2. Identify gaps and weaknesses

With a complete picture of performance, it becomes much easier to identify deficiencies in your training program. For instance, maybe you notice that protocol compliance is strong in training but consistently breaks down when agents are on live calls. This disconnect is a clear signal that something in your current design isn’t working – and it gives you the data you need to allocate your resources where they’ll have the greatest impact.

3. Target training using specific scenarios

Once you’ve identified the gaps, you can target your training to optimize for those situations. For this, AI-powered simulations are the gold standard. But even if you use a different approach, the core principle remains the same: put agents into realistic scenarios and evaluate their ability to navigate them. Broadly, there are three ways to structure those assessments:

- Assess specific components. With this approach, you’re zooming in on a few key skills – such as de-escalation, empathy, or policy application – and evaluating how well an agent can execute in those areas. This can help your agents sharpen skills where they might be weak or lack confidence.

- Progressive difficulty. Here, agents are exposed to increasingly complex situations, ranging from straightforward problems with cooperative customers to multi-layered situations with angry callers. This approach is great for preparing agents for a wide spectrum of scenarios and can help trainers spot where performance starts to decline.

- Training snapshots. With this approach, you assess agents at multiple checkpoints throughout the training. The goal is to see not just where they are, but also whether they’re on a trajectory that predicts long-term success. Typically, trainees fall into three groups: highly likely to succeed, likely to succeed with support, and unlikely to succeed. The bulk of your resources should go to the second group.

4. Create feedback loops

Assessing your training isn’t a linear, one-time process. It’s a continuous loop where data-driven insights flow back into your training design and updates. We recommend organizations focus on building two feedback loops:

- Quantitative feedback. Use performance data to compare how trainees perform on their first weeks of live calls against their training assessments. With the right tools, you can track the same skills across training and production to spot disconnects quickly. For example, you might notice that a skill that looked solid in roleplays or simulations isn’t being applied as well during live calls.

- Qualitative feedback. Data alone can miss what supervisors see every day on the floor. That’s why there needs to be structured, ongoing communication between supervisors of live agents and training teams. This ensures critical insights, such as recurring skills that need extra coaching, don’t get lost – and that training can evolve with operational realities. We recommend incorporating structured supervisor feedback for every other cohort to avoid constant updates to the training program.
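To make the quantitative loop concrete, here is a minimal sketch of comparing the same skills across training and live calls. Everything in it is an assumption for illustration: the `skill_gaps` function, the 0–100 scoring scale, the skill names, and the 10-point threshold are hypothetical and not part of any specific tool.

```python
from statistics import mean

def skill_gaps(training_scores, live_scores, threshold=10):
    """Flag skills whose average live score trails the training average.

    Both arguments map a skill name to a list of scores on a 0-100 scale
    (an assumed scale). A skill is flagged when its average drops by more
    than `threshold` points between training and live calls.
    """
    gaps = {}
    for skill, train_vals in training_scores.items():
        live_vals = live_scores.get(skill)
        if not train_vals or not live_vals:
            continue  # skip skills that were not scored in both settings
        drop = mean(train_vals) - mean(live_vals)
        if drop > threshold:
            gaps[skill] = drop
    return gaps

# Hypothetical scores for one cohort
training = {
    "de_escalation": [90, 85, 95],
    "policy_application": [80, 82, 78],
}
live = {
    "de_escalation": [60, 65, 70],
    "policy_application": [79, 80, 81],
}

flagged = skill_gaps(training, live)
print(flagged)
```

In this made-up example, only de-escalation is flagged, which is the kind of disconnect worth feeding back into training design. The right threshold depends on your rubric and how noisy your scores are.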

4 best practices to measure the effectiveness of call center training

No matter your assessment approach, a few guiding principles will help you get the clearest signal of whether training is working:

1. Don’t grade on a curve

Always measure agents against the standards of readiness that you’d want them to have on a live call. Yes, people won’t meet that bar early in their training, but lowering the standard at different checkpoints creates false signals. It can mask true areas of growth, or worse, green-light agents who aren’t yet equipped to deliver a positive customer experience.

2. Assess across a range of dimensions

While the specific number of dimensions you assess will depend on your organization’s needs, you want enough to capture the full picture, but not so many that the data becomes unmanageable. You also don’t have to evaluate every skill at every checkpoint. But over the course of training, make sure you’re gathering enough evidence across each area of your rubric.

3. Watch for assessment drift

More experience doesn’t always equal better calibration. In fact, senior assessors are often at risk of being too harsh and unintentionally making agents look less ready than they actually are. As a result, your team members may spend more time in training than necessary and waste valuable resources. To keep assessments fair and consistent, consider these approaches:

- Option #1: Use AI tools. AI isn’t flawless, but it’s more consistent than human judgment. Automated scoring can significantly reduce bias and maintain reliability at scale.

- Option #2: Change up assessors. Having a different trainer assess the same agent can prevent one person’s bias from skewing results.

- Option #3: Have multiple assessors. Some call center training programs assign multiple evaluators to each conversation or attempt to calibrate every assessor against a shared standard. In practice, this is resource intensive and rarely worth the effort since perfect calibration is a myth.
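Whichever option you choose, a simple check can help you notice drift in the first place. The sketch below compares each assessor's average score to the pooled average. The `assessor_offsets` function, the assessor names, and the 0–100 scale are hypothetical, and the comparison assumes assessors are scoring broadly comparable agents; a large offset is a prompt to investigate, not proof of bias.

```python
from statistics import mean

def assessor_offsets(scores_by_assessor):
    """Return each assessor's average score minus the pooled average.

    A strongly negative offset suggests an assessor may be scoring more
    harshly than their peers; a strongly positive one, more leniently.
    """
    all_scores = [s for scores in scores_by_assessor.values() for s in scores]
    if not all_scores:
        return {}
    overall = mean(all_scores)
    return {
        name: mean(scores) - overall
        for name, scores in scores_by_assessor.items()
        if scores  # ignore assessors with no scores yet
    }

# Hypothetical scores given by two assessors
offsets = assessor_offsets({
    "assessor_a": [80, 90],
    "assessor_b": [60, 70],
})
print(offsets)
```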

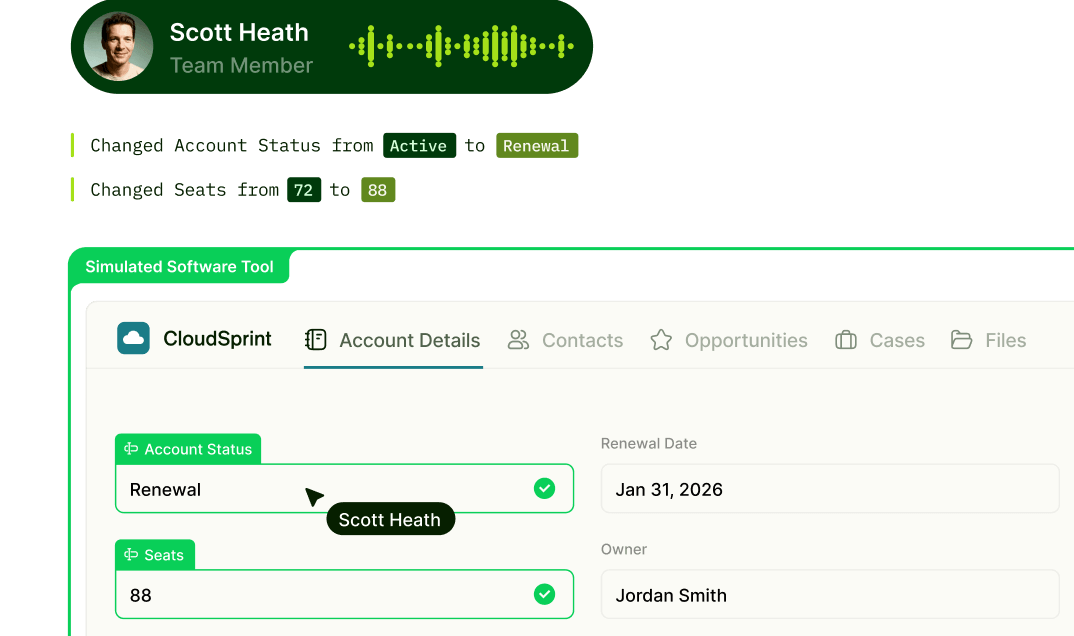

4. Evaluate software use alongside conversation skills

Training should measure not just what’s said, but how tools are used. At ReflexAI, one of our customers found that if data isn’t entered correctly within the first 60 seconds, calls get routed to the wrong team and conversion drops to 10%. In other words, call center success relies on how conversation and tools are used together – so it’s critical to measure tool use as well.

Measure your training effectiveness with ReflexAI

Make every training dollar go further by investing in an airtight assessment process. With ReflexAI, you can track and analyze every interaction during the training process to deliver actionable insights. Want to learn more?